Quantum Computing -- An Update

In March 2022 I wrote a description of the Quantum Technology Ecosystem. I thought this would be a good time to check in on the progress of building a quantum computer and explain more of the basics.

Just as a reminder, quantum technologies are used in three very different and distinct markets:

Quantum Computing, Quantum Communications, and Quantum Sensing and Metrology. If you don’t know the difference between a qubit and a cueball (I didn’t), read the tutorial here.

Summary –

There’s been incremental technical progress in making physical qubits

There is no clear winner yet between the seven approaches in building qubits

Reminder – why build a quantum computer?

How many physical qubits do you need?

Advances in materials science will drive down error rates

Regional research consortiums

Venture capital investment FOMO and financial engineering

We talk a lot about qubits in this post. As a reminder, qubit is short for quantum bit. It is a quantum computing element that leverages the principle of superposition (that quantum particles can exist in many possible states at the same time) to encode information via one of four methods: spin, trapped atoms and ions, photons, or superconducting circuits.

Incremental Technical Progress

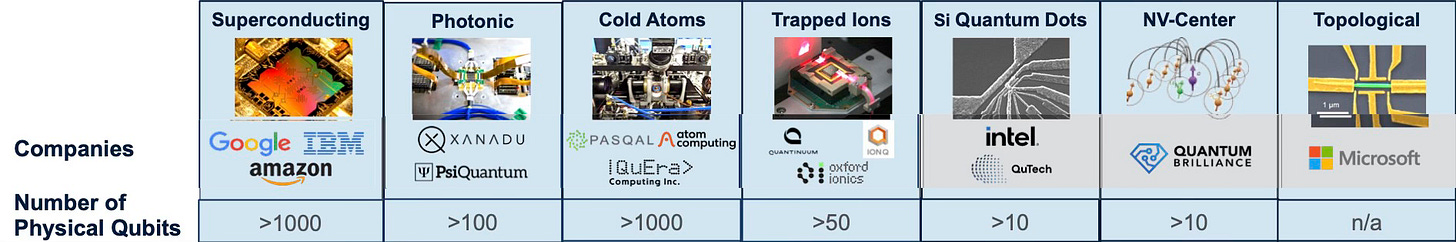

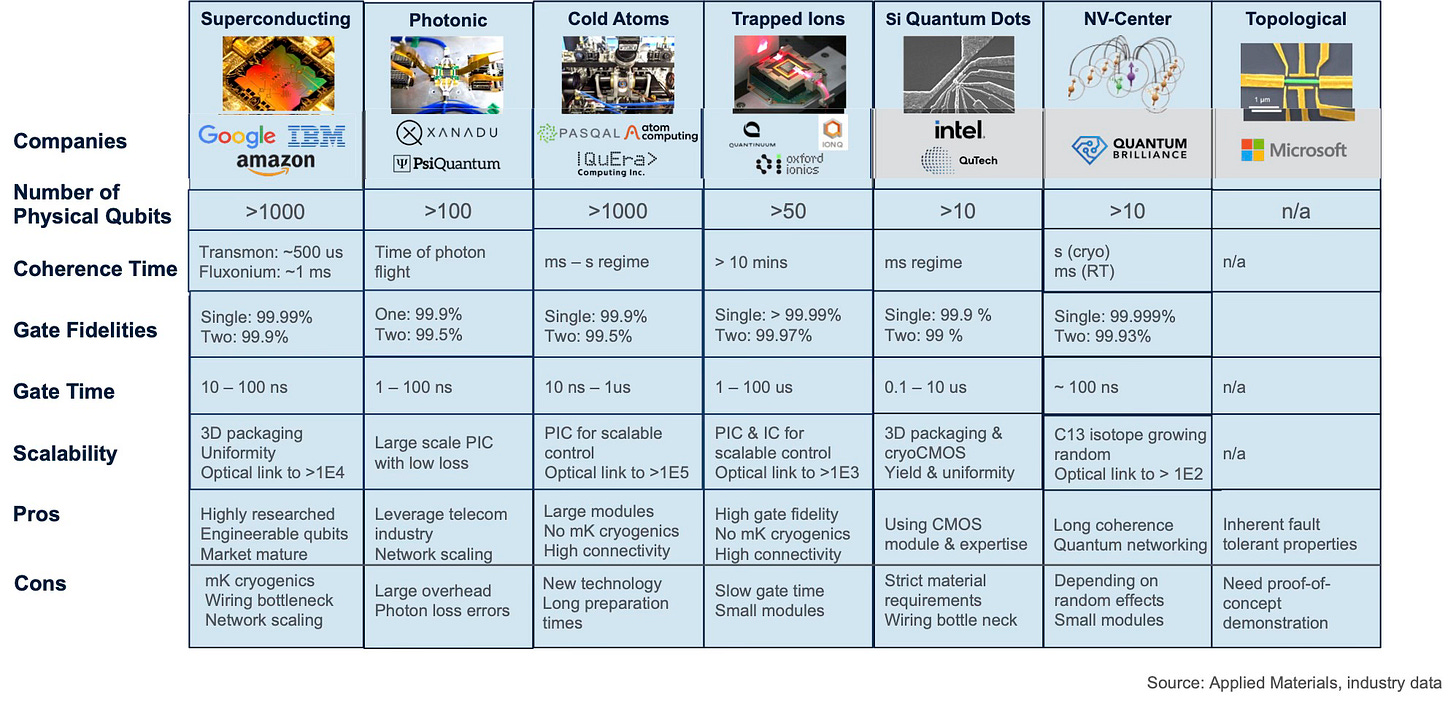

As of 2024, there are seven different approaches being explored to build physical qubits for a quantum computer. The most mature are currently Superconducting, Photonics, Cold Atoms, and Trapped Ions. Other approaches include Quantum Dots, Nitrogen Vacancy in Diamond Centers, and Topological. All of these approaches have incrementally increased the number of physical qubits.

These multiple approaches are being tried because there is no consensus on the best path to building logical qubits. Each company believes its technology approach will give it a path to scale to a working quantum computer.

Every company currently hypes the number of physical qubits they have working. By itself this is a meaningless number to indicate progress to a working quantum computer. What matters is the number of logical qubits.

Reminder – Why Build a Quantum Computer?

One of the key misunderstandings about quantum computers is that they are faster than current classical computers on all applications. They are not. They are faster only on a small set of specialized algorithms, and these special algorithms are what make quantum computers potentially valuable. For example, running Grover’s algorithm on a quantum computer can search unstructured data faster than a classical computer (a quadratic speedup: roughly √N steps instead of N). Further, quantum computers are theoretically very good at minimization, optimization, and simulation problems: think optimizing complex supply chains, the energy states that form complex molecules, financial models (looking at you, hedge funds), etc.
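To make the speedup concrete, here is a toy, noise-free simulation of Grover’s algorithm in plain Python. It is a sketch, not how any real quantum SDK works: the function name is hypothetical, the state vector is stored as an ordinary list of real amplitudes, and we assume exactly one marked item. Each iteration flips the sign of the marked item (the “oracle”) and then reflects all amplitudes about their mean (the “diffusion” step).

```python
import math


def grover_success_probability(num_qubits, marked):
    """Toy, noise-free simulation of Grover's search over N = 2**num_qubits items.

    Returns (number of Grover iterations used, probability of measuring `marked`).
    """
    n_items = 2 ** num_qubits
    # Start in the uniform superposition: every item has amplitude 1/sqrt(N).
    amps = [1 / math.sqrt(n_items)] * n_items
    # The near-optimal number of iterations is about (pi/4) * sqrt(N).
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    # Measurement probability is the squared amplitude.
    return iterations, amps[marked] ** 2


for n in (8, 10):
    iters, prob = grover_success_probability(n, marked=3)
    print(f"N = {2 ** n}: {iters} Grover iterations, P(marked) = {prob:.4f}")
```

For 1,024 items the sketch uses 25 Grover iterations and ends with the marked item measured with probability above 99%, whereas a classical linear search needs about 512 lookups on average. Simulating it classically still touches every amplitude on every step; the speedup only exists on real quantum hardware.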

It’s possible that quantum computers will be treated as “accelerators” for overall compute workflows, much like GPUs today. In addition, several companies are betting that “algorithmic” qubits (better than “noisy” but worse than “error-corrected”) may be sufficient to provide some incremental performance on workflows like simulating physical systems. This potentially opens the door for earlier cases of quantum advantage.

However, while all of these algorithms might have commercial potential one day, no one has yet come up with a use for them that would radically transform any business or military application. Except for one, and that one keeps people awake at night: Shor’s algorithm for integer factorization. The difficulty of factoring large integers underlies much of today’s public key cryptography.
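Shor’s algorithm turns factoring into a period-finding problem: pick a number a, find the smallest r with a^r mod N = 1, and the factors usually fall out of a greatest-common-divisor calculation. Only the period-finding step needs a quantum computer. The minimal sketch below (function names are hypothetical) brute-forces the period classically, which takes exponential time and so only works on toy numbers, then applies the standard classical post-processing.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This is the period-finding step that Shor's algorithm does efficiently
    on a quantum computer; classically it takes exponential time.
    """
    if gcd(a, n) != 1:
        raise ValueError("a must share no factor with n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Classical post-processing from Shor's algorithm; None means 'try another a'."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd period: this choice of a does not help
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a^(r/2) = -1 (mod n): this choice of a does not help
    # Otherwise both gcds are nontrivial factors of n.
    return tuple(sorted((gcd(half - 1, n), gcd(half + 1, n))))


print(factor_from_order(7, 15))  # period 4; prints (3, 5)
print(factor_from_order(2, 21))  # period 6; prints (3, 7)
```

When a choice of a fails (odd period, or a^(r/2) = -1 mod N), the algorithm simply picks another a and tries again.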

The security of today’s public key cryptography systems rests on the assumption that breaking their keys (for RSA, typically 2048 bits or more) is practically impossible. Breaking them requires factoring very large numbers into their prime factors (e.g., RSA) or solving discrete logarithm problems over elliptic curves (e.g., ECDSA, ECDH) or finite fields (e.g., DSA), which no classical computer, however large, can do in any practical amount of time. Shor’s algorithm can solve both problems, so it could crack these codes if run on a large enough quantum computer. This is why NIST has been encouraging the move to post-quantum (quantum-resistant) cryptography.
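Here is why factoring matters for RSA, using the textbook toy key n = 61 x 53 = 3233 with public exponent e = 17 (real keys use moduli of 2048 bits or more). Once an attacker factors n, recomputing the private key from the public key is trivial. This is a minimal sketch with hypothetical function names; trial division stands in for the factoring step that Shor’s algorithm would make feasible at real key sizes. It relies on Python’s built-in `pow()`, including `pow(e, -1, phi)` for the modular inverse, which needs Python 3.8 or later.

```python
def factor_by_trial_division(n):
    """Split n into (smallest factor, cofactor) by trial division.

    Fine for toy numbers; hopeless for a 2048-bit modulus.
    """
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factors")


def break_toy_rsa(n, e, ciphertext):
    """Recover the plaintext using only the public key (n, e) and the ciphertext."""
    p, q = factor_by_trial_division(n)  # the hard step Shor's algorithm would speed up
    phi = (p - 1) * (q - 1)             # Euler's totient of n = p * q
    d = pow(e, -1, phi)                 # private exponent: inverse of e mod phi
    return pow(ciphertext, d, n)        # decrypt as the key owner would


# Textbook toy key: n = 61 * 53 = 3233, e = 17.
n, e = 3233, 17
message = 65
ciphertext = pow(message, e, n)  # encrypt with the public key
print(break_toy_rsa(n, e, ciphertext))  # prints 65
```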

How many physical qubits do you need for one logical qubit?

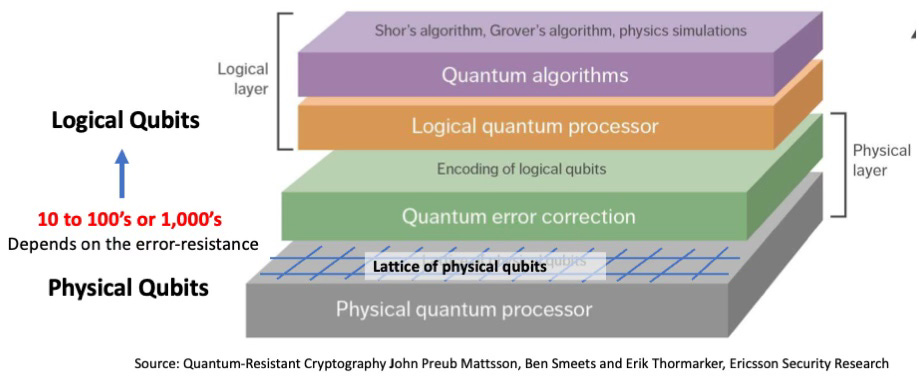

Thousands of logical qubits are needed to create a quantum computer that can run these specialized applications. Each logical qubit is constructed out of many physical qubits. The question is, how many physical qubits are needed? Herein lies the problem.

Unlike traditional transistors in a microprocessor, which always work once manufactured, qubits are unstable and fragile. They can pop out of a quantum state due to noise, decoherence (when a qubit interacts with the environment), crosstalk (when a qubit interacts with a physically adjacent qubit), and imperfections in the materials making up the quantum gates. When that happens, errors occur in quantum calculations. To correct for those errors, you need lots of physical qubits to make one logical qubit.

So how do you figure out how many physical qubits you need?

You start with the algorithm you intend to run.

Different quantum algorithms require different numbers of qubits. Some algorithms (e.g., Shor’s prime factoring algorithm) may need >5,000 logical qubits (the number may turn out to be smaller as researchers think of how to use fewer logical qubits to implement the algorithm.)

Other algorithms (e.g., Grover’s algorithm) require fewer logical qubits for trivial demos but need thousands of logical qubits to see an advantage over a linear search running on a classical computer. (See here, here and here for other quantum algorithms.)

Measure the physical qubit error rate.

The number of physical qubits you need to make a single logical qubit depends first on the physical qubit error rate (gate error rates, coherence times, etc.). Different technical approaches (superconducting, photonics, cold atoms, etc.) have different error rates and causes of errors unique to the underlying technology.

Current state-of-the-art qubits have error rates typically in the range of 1% to 0.1%. This means that, on average, between one in 100 and one in 1,000 quantum gate operations will result in an error. System performance is limited by the worst 10% of the qubits.
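Those rates sound small, but errors compound over a long computation. A minimal sketch with a hypothetical function name, assuming every gate fails independently with the same probability (real devices have correlated errors and uneven qubits, which is exactly why the worst 10% matter):

```python
def circuit_success_probability(gate_error_rate, num_gates):
    """Chance that every gate in a circuit runs without error.

    Simplifying assumption: each gate fails independently with the same rate.
    """
    return (1 - gate_error_rate) ** num_gates


for rate in (0.01, 0.001):
    for gates in (100, 1_000, 1_000_000):
        prob = circuit_success_probability(rate, gates)
        print(f"error rate {rate:.1%}, {gates:>9,} gates: P(no error) = {prob:.3g}")
```

At a 0.1% gate error rate, a 1,000-gate circuit runs cleanly only about 37% of the time, and a circuit with a million gates essentially never does. Shor’s algorithm at cryptographic key sizes needs far more than a million gates, which is why error correction is unavoidable.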

Choose a quantum error correction code

To recover from error-prone physical qubits, quantum error correction encodes the quantum information of one logical qubit across a larger set of physical qubits, so that the encoded information is resilient to errors. The surface code is the most commonly proposed error correction code. A practical surface code uses hundreds of physical qubits to create a logical qubit. Quantum error correction codes get more efficient as the error rates of the physical qubits drop. When the physical error rate rises above a certain threshold, error correction fails, and the logical qubit becomes as error prone as the physical qubits.
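The threshold idea shows up even in the simplest possible code. This sketch uses a classical repetition code, not the surface code: store one bit as d copies and decode by majority vote, assuming each copy flips independently with probability p (the function name is hypothetical). Its threshold is 50% rather than the surface code’s fraction of a percent, but the behavior is the same in kind: below threshold, adding copies drives the logical error rate down; above it, adding copies makes things worse.

```python
from math import comb


def repetition_logical_error(p, d):
    """Logical error rate of a d-copy repetition code with majority-vote decoding.

    Assumes each copy flips independently with probability p; d must be odd.
    """
    if d % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    # Decoding fails when a majority (more than d/2) of the copies are flipped.
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))


distances = (1, 3, 5, 7)
for p in (0.1, 0.6):  # below and above this code's 50% threshold
    rates = [repetition_logical_error(p, d) for d in distances]
    print(f"p = {p}: " + ", ".join(f"d={d}: {r:.4f}" for d, r in zip(distances, rates)))
```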

The Math

To factor a 2048-bit number using Shor’s algorithm with a 10^-2 (1% per physical qubit) error rate:

Assume we need ~5,000 logical qubits.

With an error rate of 1%, the surface error correction code requires ~500 physical qubits to encode one logical qubit. (The number of physical qubits required to encode one logical qubit using the surface code depends on the error rate.)

Physical qubits needed for Shor’s algorithm = 500 x 5,000 = 2.5 million

If you could reduce the error rate by a factor of 10, to 10^-3 (0.1% per physical qubit):

Because of the lower error rate, the surface code would need only ~100 physical qubits to encode one logical qubit.

Physical qubits needed for Shor’s algorithm = 100 x 5,000 = 500 thousand

In reality, another 10% or so of ancillary physical qubits are needed for overhead. And no one yet knows the error rate of wiring multiple logical qubits together via optical links or other technologies.
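The arithmetic above is simple enough to script, which makes it easy to try other error-rate scenarios. A minimal sketch; the function name is hypothetical, and the optional overhead parameter models the roughly 10% of ancillary qubits mentioned above (it still ignores the unknown cost of linking logical qubits together).

```python
def physical_qubits_needed(logical_qubits, physical_per_logical, ancilla_overhead=0.0):
    """Total physical qubits = logical x physical-per-logical, plus optional overhead.

    ancilla_overhead is a fraction, e.g. 0.10 for the ~10% mentioned above.
    """
    return round(logical_qubits * physical_per_logical * (1 + ancilla_overhead))


# Physical qubits per logical qubit for each error-rate scenario in the text.
scenarios = {"1% error rate": 500, "0.1% error rate": 100}
for label, per_logical in scenarios.items():
    bare = physical_qubits_needed(5_000, per_logical)
    with_overhead = physical_qubits_needed(5_000, per_logical, ancilla_overhead=0.10)
    print(f"{label}: {bare:,} physical qubits ({with_overhead:,} with ~10% overhead)")
```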

(One caveat to the math above: it assumes that every technical approach (Superconducting, Photonics, Cold Atoms, Trapped Ions, et al.) will need hundreds of physical qubits of error correction for each logical qubit. There is always a chance that a breakthrough could create physical qubits that are inherently stable, so that the number of error correction qubits needed drops substantially. If that happens, the math changes dramatically for the better and quantum computing becomes much closer.)

Today, the best anyone has done is to create about 1,000 physical qubits.

We have a ways to go.

Advances in materials science will drive down error rates

As the math above shows, regardless of the technology used to create physical qubits (Superconducting, Photonics, Cold Atoms, Trapped Ions, et al.), reducing qubit error rates can have a dramatic effect on how quickly a quantum computer can be built. The lower the physical qubit error rate, the fewer physical qubits are needed for each logical qubit.

The key to this is materials engineering. To make a system of hundreds of thousands of qubits work, the qubits need to be uniform and reproducible. For example, many decoherence errors are caused by defects in the materials used to make the qubits. For superconducting qubits, avoiding them requires uniform film thickness, controlled grain size, and controlled surface roughness. Other technologies require low loss and uniformity. All of the approaches to building a quantum computer require engineering exotic materials at the atomic level: resonators using tantalum on silicon, Josephson junctions built out of magnesium diboride, transition-edge sensors, superconducting nanowire single-photon detectors, etc.

Materials engineering is also critical in packaging these qubits (whether with superconducting or conventional packaging) and in interconnecting hundreds of thousands of qubits, potentially with optical links. Today, most qubits are made on legacy 200mm (or older) technology using hand-crafted processes. To produce qubits at scale, modern 300mm semiconductor technology and equipment will be required to create better-defined structures, clean interfaces, and well-defined materials. There is an opportunity to engineer and build higher-fidelity qubits with the most advanced semiconductor fabrication systems, so that the path from R&D to high-volume manufacturing is fast and seamless.

There are likely only a handful of companies on the planet that can fabricate these qubits at scale.

Regional research consortiums

Two U.S. states, Illinois and Colorado, are vying to be the center of advanced quantum research.

Illinois Quantum and Microelectronics Park (IQMP)

Illinois has announced the Illinois Quantum and Microelectronics Park initiative, in collaboration with DARPA’s Quantum Proving Ground (QPG) program, to establish a national hub for quantum technologies. The state approved $500M for a “Quantum Campus” and has received $140M+ from DARPA, with the state of Illinois matching those dollars.

Elevate Quantum

Elevate Quantum is the quantum tech hub for Colorado, New Mexico, and Wyoming. The consortium was awarded $127M from federal and state governments: $40.5M from the Economic Development Administration (part of the Department of Commerce), $77M from the State of Colorado, and $10M from the State of New Mexico.

(The U.S. has a National Quantum Initiative (NQI) to coordinate quantum activities across the entire government; see here.)

Venture capital investment, FOMO, and financial engineering

Venture capital has poured billions of dollars into quantum computing, quantum sensors, quantum networking and quantum tools companies.

However, regardless of the amount of money raised, corporate hype, PR spin, press releases, or public offerings, no company is remotely close to having a quantum computer, or even close to running any commercial application substantively faster than on a classical computer.

So why all the investment in this area?

FOMO – Fear Of Missing Out. Quantum is a hot topic. The U.S. government has declared quantum of national interest. If you’re a deep tech investor and you don’t have one of these companies in your portfolio, it looks like you’re out of step.

It’s confusing. The possible technical approaches to creating a quantum computer – Superconducting, Photonics, Cold Atoms, Trapped Ions, Quantum Dots, Nitrogen Vacancy in Diamond Centers, and Topological – create a swarm of confusing claims. And unless you or your staff are well versed in the area, it’s easy to fall prey to the company with the best slide deck.

Financial engineering. Outsiders assume that a successful venture investment means a company that generates lots of revenue and profit. That’s not always true.

Often, companies in a “hot space” (like quantum) can go public and sell shares to retail investors who have almost no knowledge of the space other than the buzzword. If the stock price can stay high for 6 months the investors can sell their shares and make a pile of money regardless of what happens to the company.

The track record so far of quantum companies who have gone public is pretty dismal. Two of them are on the verge of being delisted.

Here are some simple questions to ask companies building quantum computers:

What are their current error rates?

What error correction code will they use?

Given their current error rates, how many physical qubits are needed to build one logical qubit?

How will they build and interconnect the number of physical qubits at scale?

How many qubits do they think are needed to run Shor’s algorithm to factor a 2048-bit number?

How will the computer be programmed? What are the software complexities?

What are the physical specs: unique hardware needed (dilution cryostats, et al.), power required, connectivity, etc.?

Lessons Learned

Lots of companies

Lots of investment

Great engineering occurring

Improvements in quantum algorithms may add as much (or more) to quantum computing performance as hardware improvements

The winners will be the ones who master materials engineering and interconnects

The jury is still out on all bets

Update: the kind folks at Applied Materials pointed me to the original 2012 surface code paper and noted that the math should look more like this:

To factor a 2048-bit number using Shor’s algorithm with a 0.3% error rate (Google’s current quantum processor error rate):

Assume we need ~ 2,000 (not 5,000) logical qubits to run Shor’s algorithm.

With an error rate of 0.3%, the surface error correction code requires ~10 thousand physical qubits to encode one logical qubit with a 10^-10 logical qubit error rate.

Physical qubits needed for Shor’s algorithm = 10,000 x 2,000 = 20 million

Still pretty far away from the 1,000 qubits we currently can achieve.

For those so inclined…

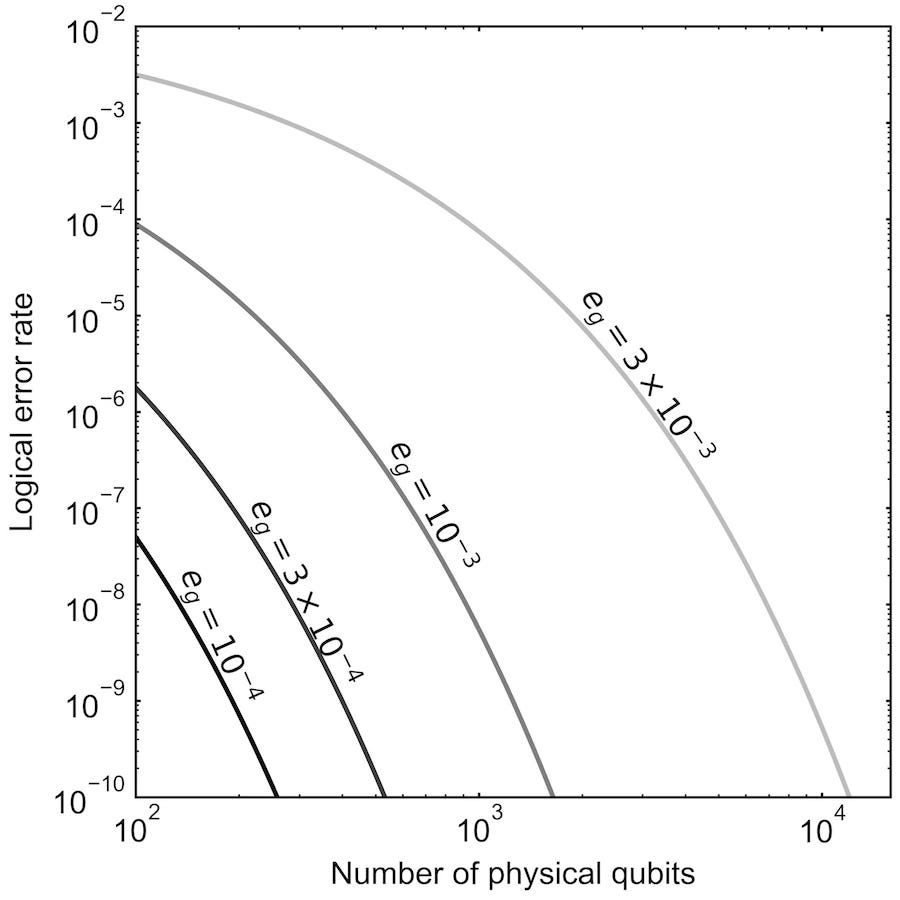

The logical qubit error rate is P_L ≈ 0.03 (p/p_th)^((d+1)/2), where p is the physical qubit error rate, p_th ≈ 0.6% is the error rate threshold for surface codes, and d is the code distance (the size of the code), which is related to the number of physical qubits by N = (2d - 1)^2.

See the plot of P_L versus N at different physical qubit error rates for reference.
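The scaling formula can be inverted to ask how large a code you need for a target logical error rate. A minimal sketch, assuming the formula and constants exactly as quoted above (prefactor 0.03, threshold p_th = 0.6%, N = (2d - 1)^2) and restricting d to odd distances, as is conventional for the surface code; the function names are hypothetical.

```python
P_TH = 0.006      # surface code threshold (~0.6%), as quoted above
PREFACTOR = 0.03  # prefactor in the quoted formula


def logical_error_rate(p, d):
    """P_L = 0.03 * (p / p_th) ** ((d + 1) / 2)."""
    return PREFACTOR * (p / P_TH) ** ((d + 1) / 2)


def physical_qubits_for_distance(d):
    """N = (2d - 1)^2 physical qubits for one distance-d logical qubit."""
    return (2 * d - 1) ** 2


def smallest_odd_distance(p, target_logical_error):
    """Smallest odd code distance d whose logical error rate meets the target."""
    if p >= P_TH:
        raise ValueError("at or above threshold: a bigger code does not help")
    d = 3
    while logical_error_rate(p, d) > target_logical_error:
        d += 2
    return d


for p in (0.003, 0.001):
    d = smallest_odd_distance(p, 1e-10)
    n = physical_qubits_for_distance(d)
    print(f"p = {p:.1%}: distance {d}, {n:,} physical qubits per logical qubit, "
          f"{n * 2_000:,} for 2,000 logical qubits")
```

With Google’s 0.3% error rate and a 10^-10 target, this gives distance 57 and 12,769 physical qubits per logical qubit, the same order of magnitude as the ~10 thousand quoted in the update; at 0.1% it drops to distance 21 and 1,681 physical qubits. At or above the 0.6% threshold no code size works, matching the point that error correction fails above threshold.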
